For the past 10 months, the National Highway Traffic Safety Administration has been collecting data on crashes that involve the use of Advanced Driver Assist Systems (ADAS), also known as Level 2 semi-automated driving systems according to the SAE’s very confusing classification system. NHTSA actually put out two separate reports: one for Level 2 systems (which require constant driver attention and are commercially available today), titled “Summary Report: Standing General Order on Crash Reporting for Level 2 Advanced Driver Assistance Systems,” and one for Level 3 and up systems, titled “Summary Report: Standing General Order on Crash Reporting for Automated Driving Systems.” Those higher-level systems are currently still in development and are intended to be true automated driving systems that do not require a driver’s attention. These reports prompted a number of news outlets to mention Tesla, mostly because the Level 2 report shows that the company has been involved in more L2 ADAS crashes than any other automaker, by a significant margin. This sounds pretty bad for Tesla, but this is also one of those cases where a lot more context is necessary to really assess what’s going on.

Before I go into this report (I’m just looking at the Level 2 one now, because the full ADS one deals with situations too different to be compared directly), I should make clear where I personally stand when it comes to these systems: I think L2 driver assist systems are inherently flawed, which I’ve explained many times before.

My opinion on L2 is that it is always going to be compromised, not for any specific technical reasons, but because these systems place humans into roles they’re just not good at, and introduce a paradox where the more capable a Level 2 system is, the worse people tend to be at remaining vigilant, because the more it does and the better it does it, the less the human feels the need to monitor carefully and be ready to take over. So the fundamental safety net of the system, the human that’s supposed to be alert, is in some ways, lost.

I’m not the only one who feels this way, by the way; many people far smarter than I am agree. Researcher Missy Cummings goes into this phenomenon in detail in her paper Evaluating the Reliability of Tesla Model 3 Driver Assist Functions from October of 2020:

“Drivers who think their cars are more capable that they are may be more susceptible to increased states of distractions, and thus at higher risk of crashes. This susceptibility is highlighted by several fatal crashes of distracted Tesla drivers with Autopilot engaged (12,13). Past research has characterized a variety of ways in which autonomous systems can influence an operator’s attentional levels. When an operator’s task load is low while supervising an autonomous system, he can experience increased boredom which results in reduced alertness and vigilance (ability to respond to external stimuli) (14).”

With that in mind, let’s look at some of the data NHTSA found. It’s worth starting by looking at how the reported crashes were categorized:

Observations from reported crashes of Level 2 ADAS-equipped vehicles are presented in this section using data reported through May 15, 2022. It is important to note that these crashes are categorized based on what driving automation system was reported as being equipped on the vehicle, not on what system was reported to be engaged at the time of the incident. In some cases, reporting entities may mistakenly classify the onboard automation system as ADS when it is actually Level 2 ADAS (and vice versa). NHTSA is currently working with reporting entities to correct this information and to improve data quality in future reporting.

So, this is a little confusing: crashes are categorized by the ADAS system the car was equipped with, and not necessarily what was reported as being active at the time of the crash? Also, the mistake made by “reporting entities” of labeling Level 2 ADAS semi-automated systems as “ADS” (Automated Driving Systems, meaning full self-driving systems) is a good reminder of how much public education still remains to be done regarding these systems.

The report also seems to contradict this a bit, as the criteria for what crashes get reported must include a Level 2 ADAS system being active at any time within 30 seconds of the crash (emphasis mine):

The General Order requires that reporting entities file incident reports for crashes involving Level 2 ADAS-equipped vehicles that occur on publicly accessible roads in the United States and its territories. Crashes involving a Level 2 ADAS-equipped vehicle are reportable if the Level 2 ADAS was in use at any time within 30 seconds of the crash and the crash involved a vulnerable road user or resulted in a fatality, a vehicle tow-away, an air bag deployment, or any individual being transported to a hospital for medical treatment.

I think this means that the crashes only get reported if a Level 2 system was active within 30 seconds of the incident, but the categorization may just be organized based on the installed equipment. At least, that’s what I think it’s saying.

Let’s go right for the most headline-tempting chart, the total number of crashes by car brand using Level 2 Advanced Driver Assist Systems:

So, obviously Tesla looks pretty bad here, with 273 crashes, over three times the next closest carmaker, Honda, which is nine times more than the next one, Subaru, with 10 crashes. Does this mean that Tesla’s Autopilot and Full Self-Driving Systems (FSD) are significantly more dangerous than everyone else’s Level 2 systems?

Well, as you likely guessed, it’s not all that simple. This chart of number of crashes doesn’t tell us the total number of miles driven or how many cars are equipped with these systems, or what percentage of drivers actually use them, and without any data like that, we really can’t make an assessment if the Tesla system is really three times as dangerous as, say, Honda’s systems, which we in turn can’t say if those are nine times more crash-prone than Subaru’s and so on.
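To make the point concrete, here is a minimal Python sketch of what normalizing by exposure would look like. The crash counts are the ones from the chart (Tesla 273, Subaru 10, and Honda at roughly 90, which is implied by the "nine times" comparison above). The mileage figures are entirely made up for illustration, since the report does not include miles driven with L2 engaged; `MakerStats` and `crashes_per_million_miles` are hypothetical names, not anything from NHTSA.

```python
from dataclasses import dataclass


@dataclass
class MakerStats:
    name: str
    crashes: int               # reported L2 crashes, taken from the chart
    l2_miles_millions: float   # HYPOTHETICAL exposure; not in the NHTSA report


def crashes_per_million_miles(stats: MakerStats) -> float:
    """Crash rate per million miles driven with L2 engaged."""
    return stats.crashes / stats.l2_miles_millions


# Crash counts come from the report; every mileage value is invented
# purely to show how exposure can change the picture.
makers = [
    MakerStats("Tesla", 273, 2730.0),
    MakerStats("Honda", 90, 450.0),
    MakerStats("Subaru", 10, 100.0),
]

rates = {m.name: crashes_per_million_miles(m) for m in makers}

# Rank by raw count and by rate to see whether the order changes.
by_count = sorted(makers, key=lambda m: m.crashes, reverse=True)
by_rate = sorted(makers, key=crashes_per_million_miles, reverse=True)

for m in makers:
    print(f"{m.name}: {m.crashes} crashes, {rates[m.name]:.2f} per million L2 miles")
```

With these invented mileage numbers, Tesla tops the raw count but Honda tops the rate. Different assumed mileages would give a different ranking, which is exactly why the raw bar chart alone can't settle which system is more dangerous.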

Now, I do have some theories about what’s going on here, and why Tesla’s numbers are so high. Compared to all the other automakers on this list, Teslas are more likely to have some form of L2 driver assist systems, since all Teslas come with the basic Autopilot system, and similar systems are still more likely to be optional on other cars.

So, I think there are simply more L2 ADAS-capable Teslas on the roads, percentage-wise, than other cars, and, equally importantly, I think Tesla drivers have two cultural traits that factor in here: There’s much more focus in the Tesla community – hell, there’s more of a community, for better or worse, period, for Tesla owners than, say, for Hyundai or Honda owners – and that community pays a lot of attention to these sorts of driving automation systems, which makes them more likely to use them, and more likely to use them more often, and in more situations and driving conditions where a Toyota or Volvo or whatever owner might just drive normally.

This same culture is also one that encourages an almost cult-like faith in an ongoing “mission” to achieve self-driving, and from there, a still-mythical generalized Artificial Intelligence, and this sort of focus and faith can lead L2 users to attribute more driving capability to their cars than the cars actually have, which in turn leads to a lack of proper oversight and attention, which leads to crashes.

There’s also the possibility that Tesla’s data-logging and reporting capabilities, which are pretty extensive, may be a factor in how many crashes are reported. The report states that carmakers that build in more advanced telematics and reporting systems will by their nature provide more data and communicate better, and cars with less advanced telematics and data logging capability may simply not be recording or reporting all incidents:

Manufacturers of Level 2 ADAS-equipped vehicles with limited data recording and telemetry capabilities may only receive consumer reports of driving automation system involvement in a crash outcome, and there may be a time delay before the manufacturer is notified, if the manufacturer is notified at all. In general, timeliness of the General Order reporting is dependent on if and when the manufacturer becomes aware of the crash and not on when the crash occurs. Due to variation in data recording and telemetry capabilities, the Summary Incident Report Data should not be assumed to be statistically representative of all crashes.

For example, a Level 2 ADAS-equipped vehicle manufacturer with access to advanced data recording and telemetry may report a higher number of crashes than a manufacturer with limited access, simply due to the latter’s reliance on conventional crash reporting processes. In other words, it is feasible that some Level 2 ADAS-equipped vehicle crashes are not included in the Summary Incident Report Data because the reporting entity was not aware of them. Furthermore, some crashes of Level 2 ADAS-equipped vehicles with limited telematic capabilities may not be included in the General Order if the consumer did not state that the automation system was engaged within 30 seconds of the crash or if there is no other available information indicating Level 2 ADAS engagement due to limited data available from the crashed vehicle. By contrast, some manufacturers have access to a much greater amount of crash data almost immediately after a crash because of their advanced data recording and telemetry.

There’s also the issue of usable domain; GM’s SuperCruise, for example, is designed to only operate on roads that have been mapped and approved for use by GM, where other systems, like Tesla’s Autopilot, are not limited this way.

As a result, there are just fewer places for a GM L2 ADAS vehicle to operate, and the places where it can operate likely have a greater margin of safety because they have been pre-vetted. So, does that mean that SuperCruise is safer than Autopilot? You could read it that way, but it’s still not really an apples-to-apples comparison.

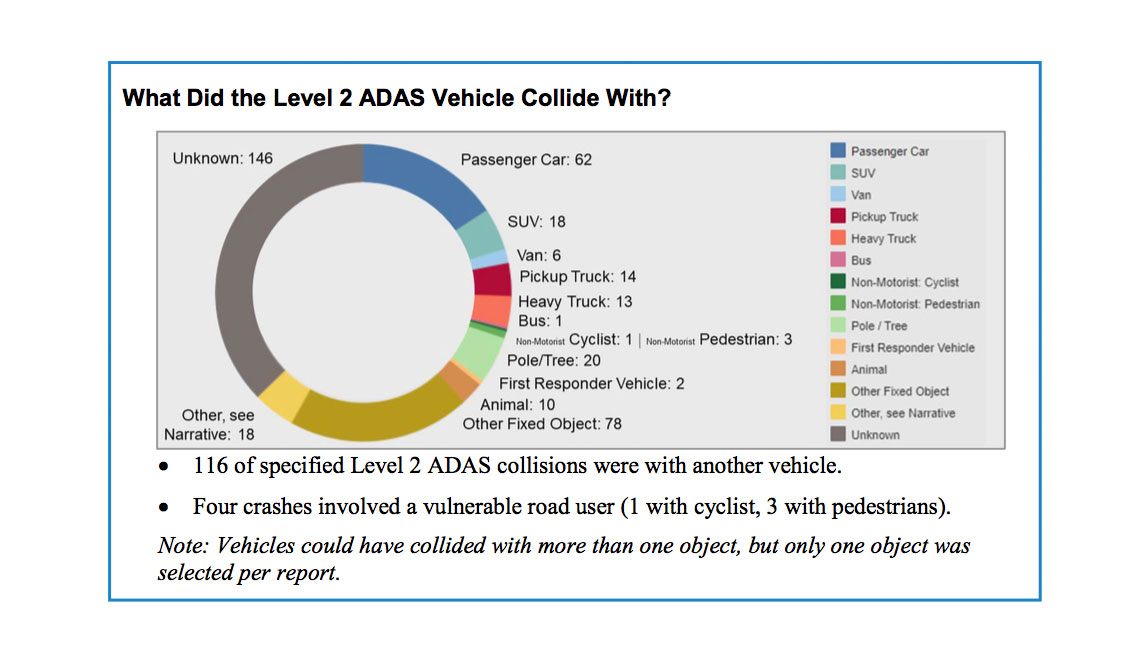

Also interesting in the report is what the cars actually crashed into:

Passenger cars are, of course, at the top of the list, but the largest category is “unknown,” which sounds pretty mysterious. First responder vehicles are only listed twice, which is notable because NHTSA already is investigating Tesla L2-related crashes with first-responder vehicles in another study, and that one notes 14 crashes.

Also very telling is this chart that logs where the L2 ADAS-equipped vehicles were damaged:

L2 ADAS vehicles involved in crashes tended to be most commonly damaged in the front, which suggests that it’s the L2 vehicles that are crashing into things, as opposed to being crashed into. Maybe it’s my own biases, but I feel like this data supports the basic issue I see with L2 systems, that of driver inattention.

If most of the wrecks are caused by running into things, causing damage to the front of the car, then that would seem to suggest that drivers, perhaps lulled by their L2 systems doing the bulk of the driving for long, uneventful periods, are not being attentive to what their cars are driving toward. In cases where the ADAS system disengages without warning or, arguably worse, doesn’t realize that it should disengage because it is making poor driving decisions, and the driver’s attention and focus have been eroded by an otherwise effective system doing most of the work, then wrecks caused by the car running into other cars or immobile barriers or other things seem a likely result.

This report is interesting, but also very incomplete, and I think it’s premature to use it to make any really sweeping generalizations about what brands of cars have more or less dangerous Level 2 driver assist systems. I think the one thing we can take from this report is that these systems are by no means flawless yet, and are no substitute for actually paying attention to the road, no matter what the hype suggests.

It’s wise for NHTSA to keep formal tabs on all of this, so I think this is a good start. I’ll be curious to see future reports that may be able to provide more data for a clearer picture of what is actually going on.

I’ve actually started using the lane centering in my Honda to keep me awake on long drives.

How, you ask?

Well, as an example, yesterday it dodged left in an attempt to spear me into a temporary barrier on a lane under construction.

Exhilarating! I’m wide awake now, thank you!

My wife bought a Subaru with their self driving thing. It was the only car left at the dealership. I borrow her car occasionally. The self steering thing seems to keep turning itself on.

It’s really only noticeable when for some reason there are a lot of random lines painted on the road sort of in the direction of traffic. Or seams on the pavement. Or old railroad tracks. Or the street is 5 lanes on one side of the intersection and 6 lanes on the other. Or graffiti or all that stuff that conEd paints on the street before they rip it up.

In New York City, in other words, it’s all the goddamn time. In fairness the manual probably goes on for 30 pages about this, but when the car lunges for the breakdown lane or just randomly decides that you aren’t in a real lane and should be 5 feet to the left or right, you don’t have the sort of quality time to even figure out wtf it’s called, much less peruse the index to find where it is covered in the manual.

Is that what they are comparing this to?

I have many questions about the Honda numbers. I own one and the lane centering works great until it doesn’t.

It also hits the brakes randomly and accuses me of needing coffee at moments when I’m actually hyper aware of what’s happening. (Right after the lane centering nearly side swipes a truck)

Well not really helpful and not the least bit funny.

I have been driving a Tesla with the original “autopilot” system (which did not use cameras) for many years. It is truly fantastic for very long trips and for bumper to bumper traffic. The big issue with Tesla’s system is the name. They keep stubbornly marketing it as “autopilot”, when it should be called “copilot”.

The system is great at helping you drive the car, but the car is never driving itself. You are driving the car, the computer is just taking away some of the effort. I don’t have radar inside my meaty head, so knowing that the car is double checking my environment with one is rather reassuring. This is more than enough to make rush hour or boring freeway miles a lot less tiresome.

In a way, it is a bit like riding a horse. You can generally trust the horse with safely taking you in the direction where you want it to go, but no matter how smart the horse, this doesn’t mean that you can go to sleep.

I don’t believe there would be a precipitous drop in demand should they change the name to “copilot”, and people would approach the system with a much healthier attitude. But unfortunately it seems that this ship has long sailed…

I’ve never heard anyone suggest that name before. I think it’s perfect

Ford’s suite is called Co-Pilot360, so maybe too similar sounding?

I like the horse analogy. So, whenever/if-ever someone perfects a truly fully automated intelligent driving system, they absolutely should call it “Jolly Jumper”.

!! I grew up on a ranch & rode horses from early childhood to when I was about 30 years old when we sold the ranch. Horses are always trying to kill their riders, mostly by accident, sometimes intentionally. Clusterphuques usually happen when you are just riding along daydreaming. Automated driver assist seems a lot like horses.

And did you pay an extra $10,000 for the horse to try to kill you?

Horses are nowhere nearly as bad as ponies. Ponies with their short little necks that are very resistant to influence by the rider’s reins, and their apparently small brains devoted exclusively to running as fast as possible from one obstacle just big enough for a pony to fit through to the next with low branches in between. If the branch isn’t low enough maybe running under it and a small jump would help.

Wow, I’ve not seen the horse analogy before, but it’s quite a good one!

Well thank you for the recap that has been written and posted for the last few years. Did you think this was new or news?

My wife and I have had our first Tesla, model y long range, for 3 weeks now.

The level of bullshit around these cars is amazing. I’m not a fanboy, my wife knew basically nothing about Tesla as she ignores my ramblings, rightfully so.

She’s never had such a good experience with a car purchase, or a car, like the Tesla.

I completely agree. I will never buy another car from a dealership, or an ice car, again. I have a 2013 Mustang GT/CS, 2013 BRZ, 2017 Fiesta ST, 2009 Yaris (lol), 2010 Lexus RX350, and a 2006 Ram Mega Cab.

The basic autopilot is amazing, compared to all the other adas systems I’ve driven (never Chevys), and even the phantom braking is “awesome” in that it is CAUTIOUS and overly safe.

These graphs and observations are obviously useless since they don’t include miles driven and percentage of each manufacturer, at the least. But from my experience so far, only a truly stupid person would allow adas systems to get them in trouble. Customer education as an excuse is bullshit.

Every single human that buys any product risks killing themselves with it, if they don’t understand how that product works. I’m sorry, but this is on the purchaser alone. Assuming the manufacturer includes proper documentation of course.

Adas is only “inherently flawed” if you expect the worst from people. Personally, I’m with Darwin on this subject.

To be fair, the Tesla shop in SLC wasn’t wonderful, guy wanted to get rid of us real quick, had to schedule the actual delivery, a few fit and finish issues we need to resolve. But it was 100 times better than any dealership experience I’ve had. The BS was zero. Almost everything done online, never spoke on the phone even, just a few texts.

Communications were less polite than expected, very curt, to be clear, but nobody tried to sell me vin etching!!!

Less than 15 minutes for actual “transaction” at the store.

If you ended up buying a Tesla you were fed BS. Otherwise them telling you our autopilot doesn’t work and if you use it you will die. Our QC will give you a poorly built car built with crap parts and NR engineering. Any repairs will probably require towing to other states and wait a few months.

AND YOU BOUGHT IT?

What on earth are you going on about? This was no Tesla zombie purchase. I know the options available to me and decided the Tesla was my best choice.

It has been wonderful so far, without any inkling of trying to go all SkyNet on my ass…

Regarding your Darwin comment:

If someone gets themselves killed doing something stupid, I’m with you, I don’t pity them. But what about the family they t-boned? People that don’t have a Tesla have died because the Tesla driver used “AutoPilot” unsafely.

And the reason they used it that way is because they were sold a lie by Tesla. Now, on principle, I believe people should be smart enough to know companies lie if it makes them a buck. But in practice, that’s not how the world works. Tesla is scamming people into being beta testers of unproven technology, and everyone on the road is being dragged into it unwillingly. Tesla has blood on their hands, because they approach this technology in an uncaring and unethical manner that no other OEM does. How they haven’t been sued to death in such a litigious country I don’t know.

These numbers are exceptionally useless and not just because of the lack of context.

For instance, Toyota just launched its first level 2 system on the Lexus LS500h (already a super low volume car) and that only started getting in customers’ hands last month. If they have had 4 crashes that’s like, all of them.

I will never own a Tesla as long as Elon “Pedo Guy” Musk is involved in any way. Their terminology is deceitful and in general they seem like an awful company. Having said that, as a former math teacher that taught about misleading statistics that first graph is 100% garbage for so many reasons. It’s the clickbait of bar graphs and anyone using that for any sort of meaningful analysis is an idiot.

According to the Bureau of Transportation statistics there are about 6 million car crashes in the USA per year. Without knowing the total number of cars that are using Level 2 driver assistance this data is not very useful. I could look at the data for deaths by activity and see that BASE jumping only has 150 deaths per year while driving has 40,000. Does that mean driving is more dangerous than BASE jumping? Nope. Some researchers have estimated that with BASE jumping 1 person out of 60 will die in any particular year which is 1000s of times more dangerous than driving.

Many people believe Tesla Level 2 is widely used, easily abused, and infrequently makes mistakes. I am one of them.

While the bar graph in the article can easily be used for confirmation bias, it is statistically worthless without crashes per mile, road types, more crash details, or even definitive data that the system was in operation immediately before the crash. The only really useful thing about this report is that it points out that the companies and government agencies are not collecting enough of the right type of information.

Well if you actually read more than this article you would know they drive into parked semi trucks, ambulance and cop cars during emergency calls with their emergency lights going. Yeah why do the emergency vehicles always pop up in the middle of the road.

I am interested in the report with the full details because the standard AutoPilot on Teslas does not stop at stop signs or stop lights. It’s just Traffic Aware Cruise Control and Lane Keep Assist. There was a story recently where someone using it ran into an intersection and smashed into another car. This would be no different than just smashing into a car with cruise control on. How much of this was just someone failing to intervene when they are supposed to vs a failure of the systems of the vehicle, across all makers? I read about people using autopilot on city streets, which may mean they are running stop signs if they are not paying attention. There are also reports of Teslas smashing into gore points at an exit when the person is in the right lane. From my experience I am guessing there are plenty that were faults of the system, but the larger number will be failures to intervene to stop the car. I am sure there are also some where the system hit the brakes and caused a car to rear end them with a phantom braking issue. All this does is improve these systems and provide guidance going forward. Perhaps Tesla should activate the camera to monitor the driver to ensure they are paying attention like Ford does. I still love my Tesla. It’s fast, offers the same build quality as my Charger did, which is ok at best, and has the best charging infrastructure for ease of use and reliability.